AI with Model Context Protocol

In November 2024, Anthropic introduced the Model Context Protocol (MCP), an open standard for connecting language models to external data sources and tools. The protocol's goal is to help AI models produce better results by letting them work with up-to-date, real-time data.

Although large language models can process information remarkably quickly, they have struggled to access data in real time. For example, when we ask an AI model to write about a particular topic, it can miss important information simply because it has no direct access to current sources.

Anthropic set out to solve this problem by making these models more flexible, context-aware, and intelligent. A standardized infrastructure for connecting AI models to tools and information sources lets AI systems access and use information across many different contexts.

MCP has been widely adopted: major technology companies such as Block (formerly Square), among many others, have implemented the protocol since its launch, and more than a thousand open-source connectors have been created. This popularity is attributed to MCP's potential to solve important problems in AI use, such as reproducibility and standardization.

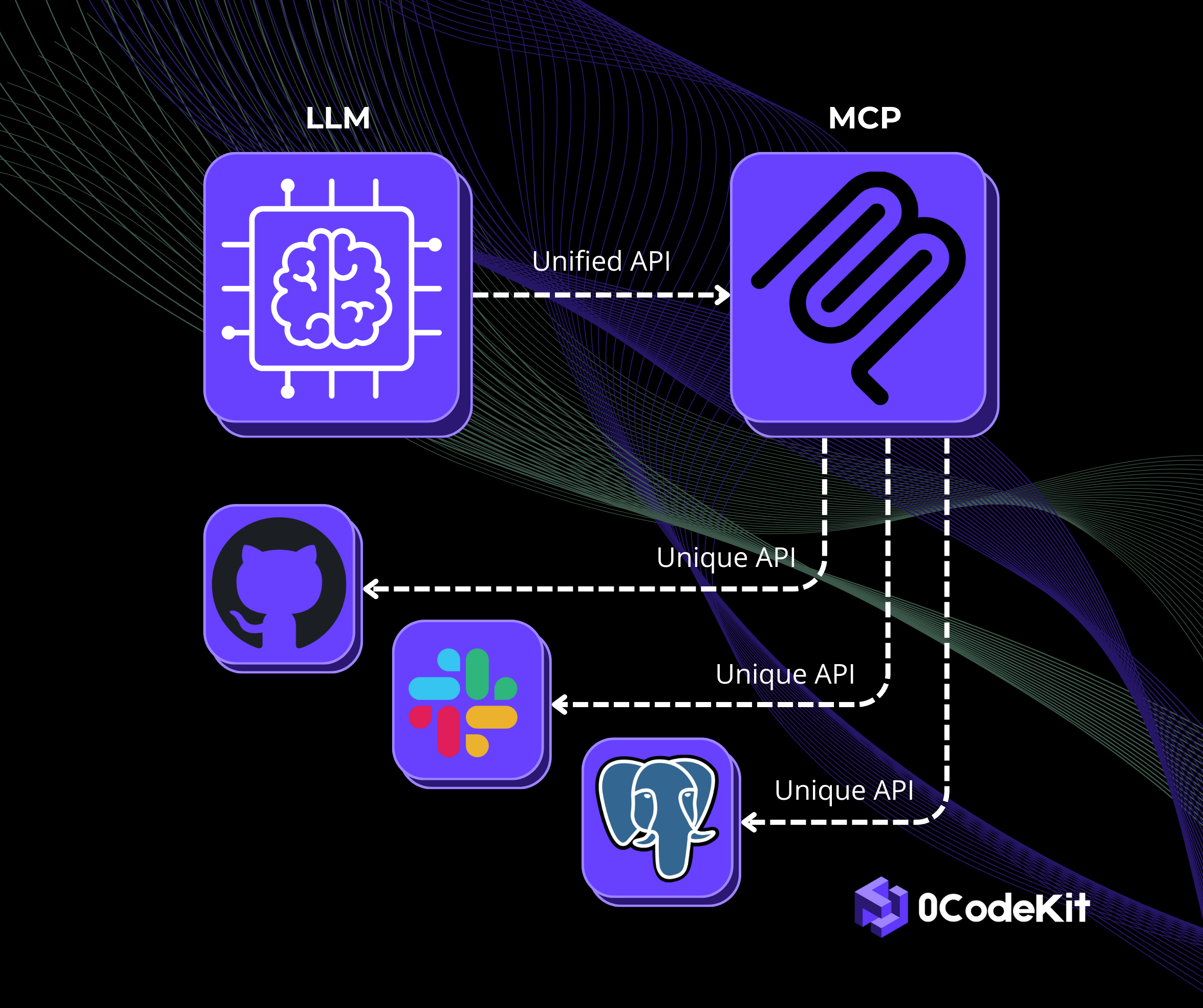

Here is how it works: MCP uses a client-server architecture. AI applications act as clients and request relevant context from servers, which expose data repositories, business tools, or other platforms. Because every source speaks the same protocol, developers no longer need to build and maintain a separate integration for each data source.
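MCP messages are JSON-RPC 2.0 requests and responses, and `tools/list` and `tools/call` are two of the methods a client can send. The sketch below is a toy, in-process model of that exchange, not the official MCP SDK. The `search_notes` tool, the `NOTES` data, `handle_request` and `ToyClient` are all hypothetical, and the sketch skips the `initialize` handshake and the real transport layer (such as stdio or HTTP) by calling the server function directly.

```python
import json

# Hypothetical data and tool, for illustration only.
NOTES = ["MCP uses JSON-RPC 2.0", "Servers expose tools, resources and prompts"]
TOOLS = [{
    "name": "search_notes",
    "description": "Return notes containing a keyword.",
    "inputSchema": {
        "type": "object",
        "properties": {"query": {"type": "string"}},
        "required": ["query"],
    },
}]


def error(req_id, code, message):
    """Encode a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": req_id,
                       "error": {"code": code, "message": message}})


def handle_request(raw: str) -> str:
    """Toy server: decode one JSON-RPC request, return the encoded response."""
    req = json.loads(raw)
    method, params = req.get("method"), req.get("params", {})
    if method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        if params.get("name") != "search_notes":
            return error(req.get("id"), -32602, "Unknown tool")  # invalid params
        query = params["arguments"]["query"].lower()
        hits = [note for note in NOTES if query in note.lower()]
        result = {"content": [{"type": "text", "text": h} for h in hits]}
    else:
        return error(req.get("id"), -32601, f"Method not found: {method}")
    return json.dumps({"jsonrpc": "2.0", "id": req["id"], "result": result})


class ToyClient:
    """Toy client: numbers each request and unwraps the result or error."""

    def __init__(self, transport):
        self.transport = transport  # any callable str -> str
        self.next_id = 1

    def request(self, method, params=None):
        msg = {"jsonrpc": "2.0", "id": self.next_id, "method": method}
        if params is not None:
            msg["params"] = params
        self.next_id += 1
        reply = json.loads(self.transport(json.dumps(msg)))
        if "error" in reply:
            raise RuntimeError(reply["error"]["message"])
        return reply["result"]


client = ToyClient(handle_request)  # in-process stand-in for a transport
print([t["name"] for t in client.request("tools/list")["tools"]])  # ['search_notes']
reply = client.request("tools/call",
                       {"name": "search_notes", "arguments": {"query": "json"}})
print(reply["content"][0]["text"])  # MCP uses JSON-RPC 2.0
```

Because the client only depends on a `str -> str` transport, the same client code could in principle talk to any server that answers these messages, which is the property that removes the need for one custom integration per data source.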

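There are 3 important roles in the MCP architecture: the host (the AI application the user interacts with), the client (a connector inside the host that maintains a one-to-one connection with a single server), and the server (a program that exposes data and capabilities through the protocol).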
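To make the division of labor between the host, client and server roles in the MCP specification concrete, here is a toy Python model. It is a sketch, not the official MCP SDK: the `Host`, `Client` and `Server` classes and the example tools are hypothetical, and real clients exchange JSON-RPC messages with servers over a transport instead of calling Python functions directly.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Server:
    """Hypothetical server: a named set of tools (tool name -> function)."""
    name: str
    tools: Dict[str, Callable[..., str]]


@dataclass
class Client:
    """One client per server: the 1:1 connection the host uses."""
    server: Server

    def list_tools(self) -> List[str]:
        return list(self.server.tools)

    def call_tool(self, tool: str, **kwargs) -> str:
        return self.server.tools[tool](**kwargs)


@dataclass
class Host:
    """The AI application: owns the clients and routes requests to them."""
    clients: List[Client] = field(default_factory=list)

    def connect(self, server: Server) -> None:
        # Each new server connection gets its own dedicated client.
        self.clients.append(Client(server))

    def available_tools(self) -> Dict[str, Client]:
        # In this toy, a later server's tool shadows an earlier one with the same name.
        return {tool: c for c in self.clients for tool in c.list_tools()}

    def call(self, tool: str, **kwargs) -> str:
        # Route the call to the client whose server advertises the tool.
        return self.available_tools()[tool].call_tool(tool, **kwargs)


host = Host()
host.connect(Server("files", {"read_file": lambda path: f"contents of {path}"}))
host.connect(Server("calendar", {"next_event": lambda: "Standup at 09:00"}))
print(sorted(host.available_tools()))  # ['next_event', 'read_file']
print(host.call("read_file", path="notes.txt"))  # contents of notes.txt
```

Keeping one client per server isolates each connection, so adding a new data source means connecting one more server rather than changing the host's existing integrations.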

Anthropic establishes the relationship between server-side and client-side applications with 3 primitives: